Stepping through code in an editor's debugger, the most common debugging approach, is generally not very helpful for Durable Functions, because it makes issue tracking difficult. This article shows how to analyze a durable orchestration using Azure Storage tables. It also offers tips for writing an efficient workflow.

Note: This blog assumes you are familiar with Durable Functions, Task Hub, and Storage Explorer. It also assumes that Azure Table Storage is the back-end data store and that the code is written in C#. There may be slight differences if you use another storage mechanism or language.

Durable Functions:

Durable Functions are great for running stateful workflows using orchestrations on Azure Functions. They are built on the Durable Task Framework.

Durable Task Framework:

The framework uses event sourcing to manage the state, checkpoints, and replays of orchestrations. Because each function's state is logged, it is easier to track down what happened during execution.

How does Durable Orchestration work?

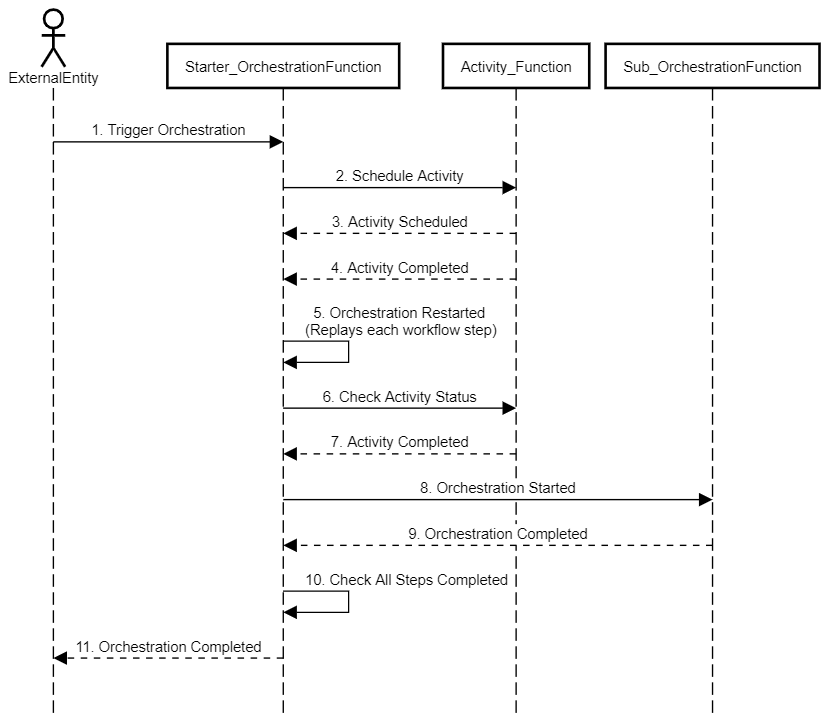

The orchestrator function has the sole responsibility of defining the workflow; it delegates the individual workflow steps to activity functions. A client function that takes a durable orchestration client (DurableOrchestrationClient in version 1.x, IDurableOrchestrationClient with the DurableClient attribute in version 2.x) starts the orchestration. When a new workflow starts, the orchestrator function is invoked, schedules the first activity, and goes to sleep. When the activity function's task completes, the orchestrator function is woken up, the workflow execution restarts, and the next activity gets scheduled.

Events such as Orchestration Started, Activity Scheduled, Activity Completed, and Orchestrator Completed are stored in the storage table. When the orchestration wakes up, it replays the workflow from the beginning, but it does not rerun activity functions that have already completed. Instead, it checks each activity call against the history recorded in the table for that execution Id. If a matching entry is present, it takes the output from the table and continues execution. Hence, debugging becomes more straightforward once we know how the orchestration events and instances are tracked in tables.
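Because the orchestrator code runs again from the top on every wake-up, statements outside the activity calls, such as logging, also run again during replay. The following is a minimal sketch of how that can be kept out of the logs, assuming Durable Functions 2.x. The function names Replay_Demo_Orchestrator and Replay_Demo_Activity are hypothetical and exist only for this illustration.

```csharp
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;
using Microsoft.Extensions.Logging;

public static class ReplayDemo
{
    // Hypothetical orchestrator used only to illustrate replay behavior.
    [FunctionName("Replay_Demo_Orchestrator")]
    public static async Task<string> Run(
        [OrchestrationTrigger] IDurableOrchestrationContext context,
        ILogger log)
    {
        // Wrap the logger so that it writes only when the orchestrator is NOT replaying.
        ILogger safeLog = context.CreateReplaySafeLogger(log);

        safeLog.LogInformation("Scheduling the activity.");

        // First pass: the call is scheduled and the orchestrator is unloaded.
        // Replay: the result is read from the recorded history instead of rerunning the activity.
        string result = await context.CallActivityAsync<string>("Replay_Demo_Activity", "Tokyo");

        safeLog.LogInformation("Activity returned {Result}.", result);
        return result;
    }

    // Hypothetical activity; it runs once even though the orchestrator code runs more than once.
    [FunctionName("Replay_Demo_Activity")]
    public static string SayHello([ActivityTrigger] string name)
    {
        return $"Hello {name}!";
    }
}
```

With a plain ILogger in the orchestrator, the first message would typically appear once per replay, which can make the logs look as if the activity had been scheduled several times.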

The diagram at the start of this section shows the execution of the durable orchestration.

How to read the Azure Storage task hub tables?

We use the hubName setting in host.json to name the tables that track event execution. The task hub creates two tables in Table Storage. In our case, those tables are DurableHubInstance and DurableHubHistory. We added the word Hub to the table names for easy identification.
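For reference, a host.json along these lines would set the hub name. This is a sketch that assumes the Functions 2.x (or later) host.json schema; the value DurableHub is an assumption inferred from the table names above, not taken from the original project. The Azure Storage provider derives the names of the instance and history tables from this hub name.

```json
{
  "version": "2.0",
  "extensions": {
    "durableTask": {
      "hubName": "DurableHub"
    }
  }
}
```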

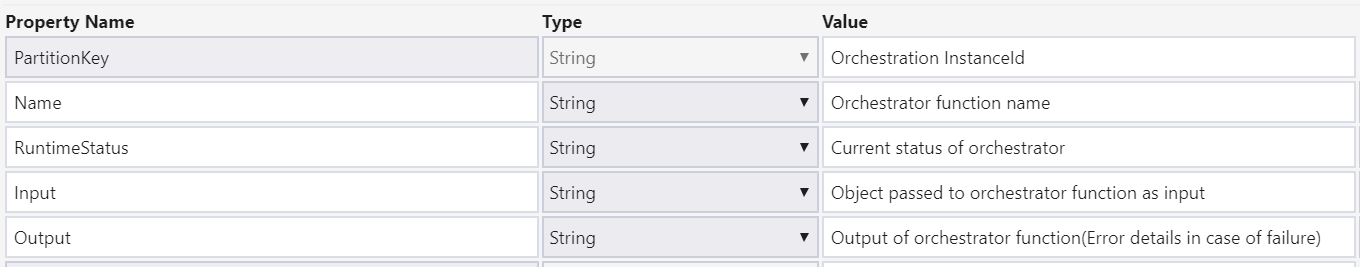

DurableHubInstance:

It stores the runtime status of the orchestrator instances. Each row in this table represents one instance of the orchestrator. Below are a few columns that we will use while tracing errors.

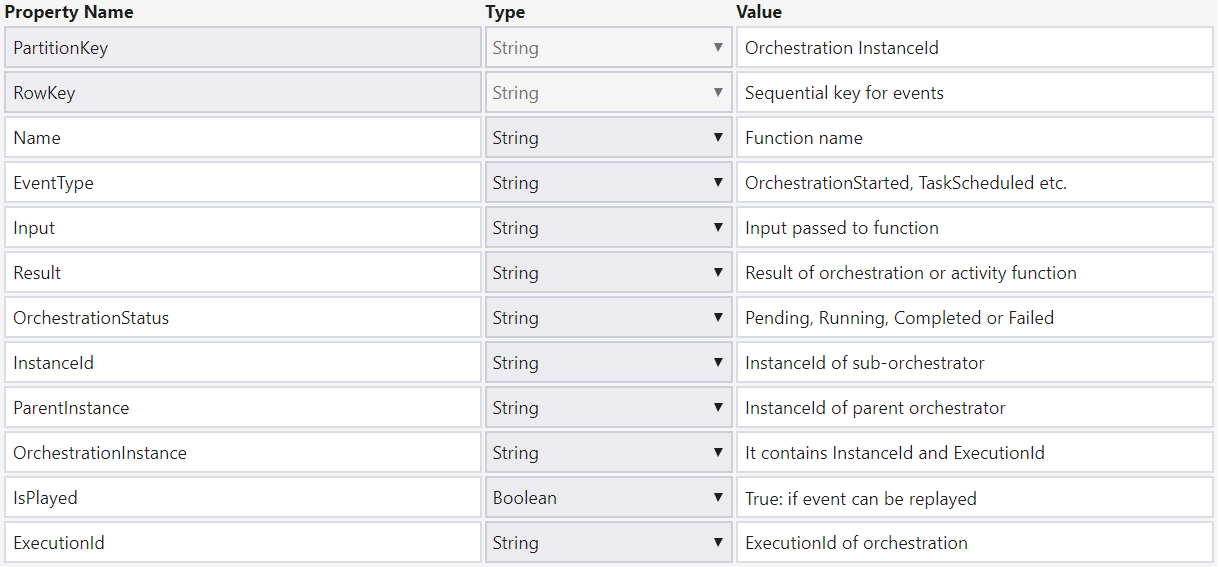

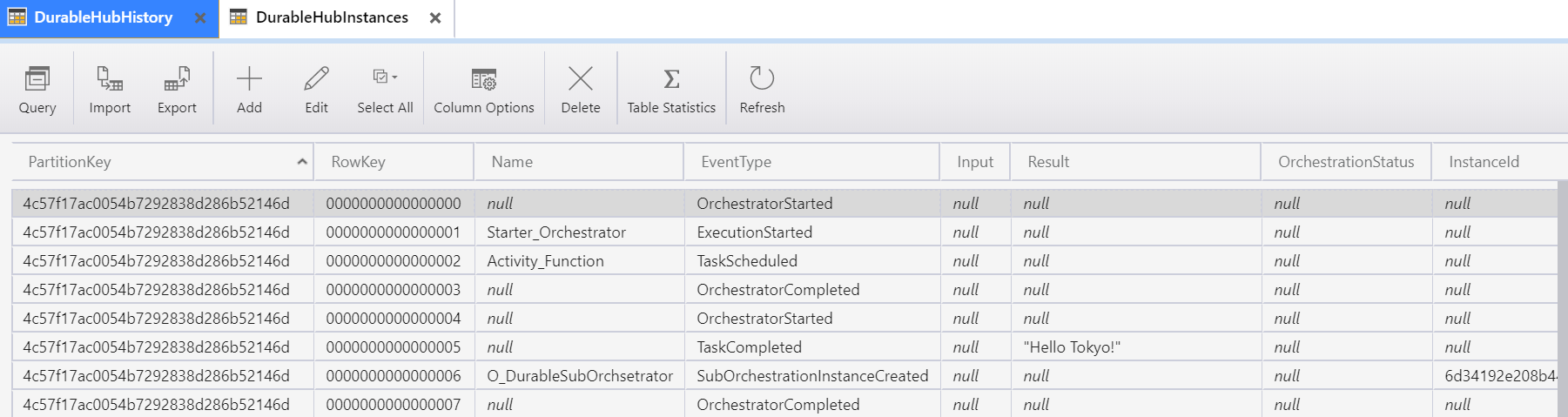

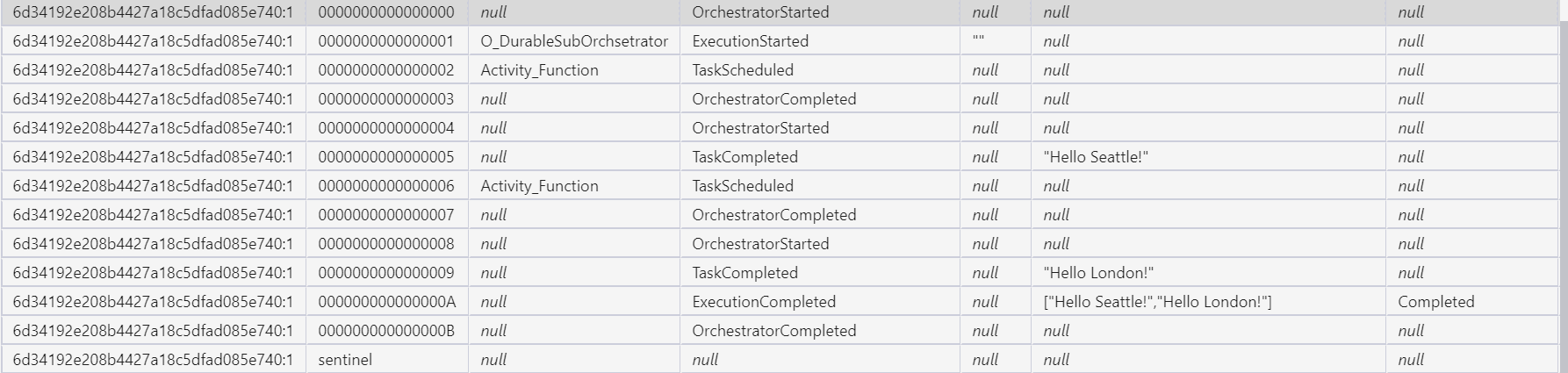

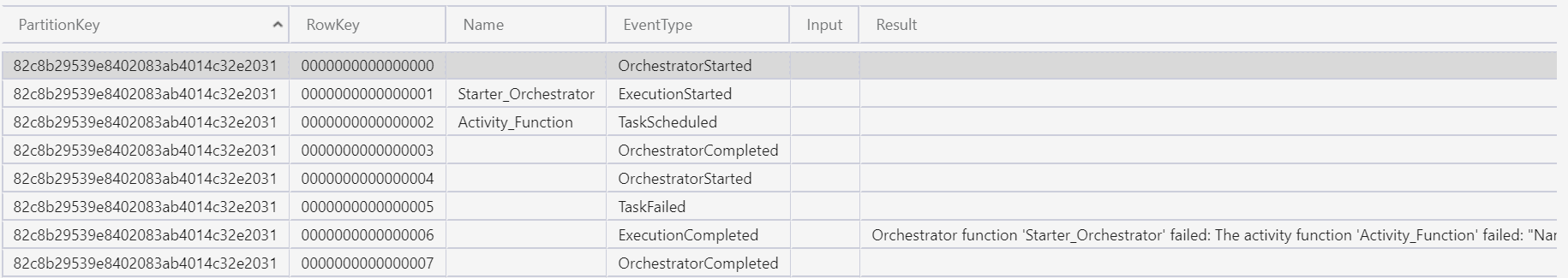

DurableHubHistory:

It contains a record of historical events related to the orchestrator. The PartitionKey is the same as the orchestration InstanceId, and the RowKey is the sequential key generated for the event. Here you can see several columns to help you identify the root cause of the orchestration failure.

Consider the following example of a durable orchestration:

```csharp
using System;
using System.Collections.Generic;
using System.Net.Http;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;
using Microsoft.Azure.WebJobs.Extensions.Http;
using Microsoft.Extensions.Logging;

namespace DurableFunctionDemo
{
    public static class DurableFunctionDemo
    {
        // HTTP-triggered client function that starts the orchestration
        [FunctionName("Starter_DurableDemo")]
        public static async Task<HttpResponseMessage> Starter_DurableDemo(
            [HttpTrigger(AuthorizationLevel.Anonymous, "get", "post")] HttpRequestMessage req,
            [DurableClient] IDurableOrchestrationClient starter,
            ILogger log)
        {
            string instanceId = await starter.StartNewAsync("Starter_Orchestrator", "");
            log.LogInformation($"Started orchestration with ID = '{instanceId}'.");
            return starter.CreateCheckStatusResponse(req, instanceId);
        }

        // Main orchestrator: one activity call followed by one sub-orchestration
        [FunctionName("Starter_Orchestrator")]
        public static async Task<List<string>> RunOrchestrator(
            [OrchestrationTrigger] IDurableOrchestrationContext context)
        {
            var outputs = new List<string>();

            // Call Activity Function
            outputs.Add(await context.CallActivityAsync<string>("Activity_Function", "Tokyo"));

            // Call Orchestrator Function
            var op = await context.CallSubOrchestratorAsync<List<string>>("O_DurableSubOrchestrator", "");
            op.ForEach(x => outputs.Add(x));

            return outputs;
        }

        [FunctionName("Activity_Function")]
        public static string SayHello([ActivityTrigger] string name, ILogger log)
        {
            log.LogInformation($"Saying hello to {name}.");

            // Uncomment to see an exception logged in the instance and history tables
            //if (name == "Tokyo")
            //{
            //    throw new Exception("Name not valid");
            //}

            return $"Hello {name}!";
        }

        // Sub-orchestrator: calls the same activity twice
        [FunctionName("O_DurableSubOrchestrator")]
        public static async Task<List<string>> DurableSubOrchestrator(
            [OrchestrationTrigger] IDurableOrchestrationContext context)
        {
            var outputs = new List<string>();
            outputs.Add(await context.CallActivityAsync<string>("Activity_Function", "Seattle"));
            outputs.Add(await context.CallActivityAsync<string>("Activity_Function", "London"));
            return outputs;
        }
    }
}
```

Execution of the orchestration begins with Starter_DurableDemo, which starts Starter_Orchestrator. The Starter_Orchestrator function then calls Activity_Function and O_DurableSubOrchestrator. When Activity_Function executes, two events, TaskScheduled and TaskCompleted, are added to the history table. When the sub-orchestrator is called, a SubOrchestrationInstanceCreated event is added to the HubHistory table, and an instance entry is made in the HubInstance table.

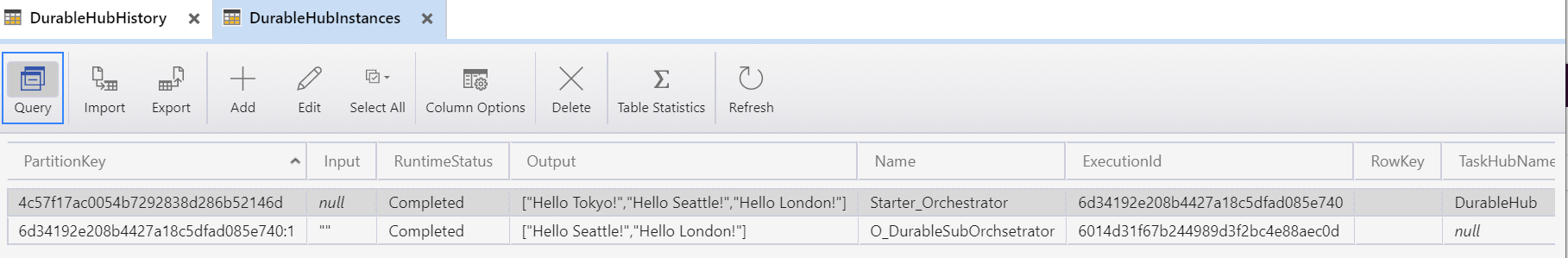

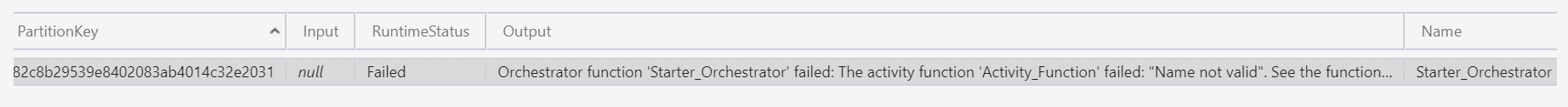

Below is a snippet of the HubInstance table. PartitionKey represents the InstanceId of the Starter_Orchestrator and O_DurableSubOrchestrator functions. You can use InstanceId to query the history table.

If an activity or sub-orchestrator fails, the output of the orchestration instance contains the details of the failure, including the name of the failed function. You can use the InstanceId and the function name to find the exact error in the history table.
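The same lookup can be done in code instead of Storage Explorer. Below is a minimal sketch that assumes the Azure.Data.Tables client library, the DurableHubHistory table name used in this article, and history columns named EventType, Name, and Reason. The column names are assumptions, so check them against your own table. HistoryTableReader and PrintHistory are hypothetical names.

```csharp
using System;
using Azure.Data.Tables;

public static class HistoryTableReader
{
    // Prints every history event recorded for one orchestration instance.
    public static void PrintHistory(string connectionString, string instanceId)
    {
        // Table name taken from this article; yours depends on the configured hub name.
        var table = new TableClient(connectionString, "DurableHubHistory");

        // The PartitionKey of a history row is the orchestration InstanceId.
        string filter = TableClient.CreateQueryFilter($"PartitionKey eq {instanceId}");

        // Rows come back ordered by RowKey, the sequential key generated per event.
        foreach (TableEntity row in table.Query<TableEntity>(filter))
        {
            // Assumed column names; verify them in Storage Explorer.
            string eventType = row.GetString("EventType");
            string name = row.GetString("Name");
            string reason = row.GetString("Reason");

            Console.WriteLine($"{row.RowKey} {eventType} {name}");

            // Failure events are expected to carry a reason.
            if (!string.IsNullOrEmpty(reason))
            {
                Console.WriteLine($"  Reason: {reason}");
            }
        }
    }
}
```

Calling PrintHistory with the storage connection string and an InstanceId copied from the instance table lists the events for that instance in order, so the failed function can be found by its name.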

Things to remember:

- Since there is no compile-time type checking when calling an activity or orchestrator function, specify the same return type as in the function definition.

- If an exception occurs that Activity/SubOrchestrators do not handle, it propagates to the orchestration function and updates its state with an error. To avoid a complete failure of your orchestration, you should handle exceptions in Activity/SubOrchestrators and write compensation logic for them.

- Activity and Orchestrator functions are typical Azure functions with a maximum timeout (to know more about hosting options and function timeouts, you can refer to this link). So even if a Durable Orchestration workflow is long-running, the individual functions are still time-constrained.

- If your orchestration has a long list of items to process, processing them all in one orchestration instance will cause performance issues, because the previous historical events are loaded into memory again for each item. To work around this, you can use ContinueAsNew on the IDurableOrchestrationContext. This method truncates the saved history of the orchestration instance and restarts the orchestration.
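The exception-handling and ContinueAsNew points above can be combined in one orchestrator. The following is a minimal sketch, assuming Durable Functions 2.x. The names Batch_Orchestrator and Compensate_Activity and the batch size of 50 are hypothetical. Activity_Function is the activity from the example earlier in this article.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.DurableTask;

public static class BatchOrchestration
{
    // Assumed batch size; pick a value that keeps the history of one generation small.
    private const int BatchSize = 50;

    // Hypothetical orchestrator that receives the remaining work items as its input.
    [FunctionName("Batch_Orchestrator")]
    public static async Task RunBatch(
        [OrchestrationTrigger] IDurableOrchestrationContext context)
    {
        List<string> remaining = context.GetInput<List<string>>() ?? new List<string>();

        // Process only the first batch in this generation of the orchestration.
        foreach (string item in remaining.Take(BatchSize))
        {
            try
            {
                await context.CallActivityAsync<string>("Activity_Function", item);
            }
            catch (FunctionFailedException)
            {
                // An unhandled activity exception surfaces here. Run compensation
                // (hypothetical activity) instead of failing the whole orchestration.
                await context.CallActivityAsync("Compensate_Activity", item);
            }
        }

        // Restart with the unprocessed items; the saved history is truncated.
        List<string> rest = remaining.Skip(BatchSize).ToList();
        if (rest.Count > 0)
        {
            context.ContinueAsNew(rest);
        }
    }
}
```

Each restart begins with an empty history, so the cost of a replay depends on the batch size rather than on the length of the full list.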

I hope some of these tips will help you debug and write an efficient durable orchestration workflow.