Test automation for regression test cases has become an important part of UAT. To draw the most benefit from an automation suite, its test reports must be easy to read and must give complete details of the suite's execution. Our project has a Selenium-based automation suite built on the TestNG framework, and we use Extent Reports to report test execution status. In this article, we explain how to capture skipped test functions in the report.

Background

We designed the automation suite to cover the user journeys in the application. In some journeys, data generated by one module feeds into another, so if the first module's test case does not execute as expected, the second module is affected. Because our business flows are complex, there are many interdependent test functions, and a failure in module one causes the dependent modules to be skipped. Thus, even after an automation run, the health of the product is not clear. A lot of time also goes into analysing the test run and taking remediation steps to ensure proper bug reporting and test coverage.
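To illustrate how one failure spreads through interdependent test functions, here is a minimal plain-Java sketch. It does not use TestNG; it only models the rule described above, assuming that a test is skipped whenever any test it depends on did not pass. The class name SkipPropagation and the test names (createOrder, createInvoice, makePayment, viewProfile) are hypothetical and are not taken from our suite.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

public class SkipPropagation {

    /**
     * Returns the status of each test, given the tests in execution order,
     * each test's prerequisites, and the set of tests whose own body fails.
     * A test is marked SKIP when any prerequisite did not pass.
     * Assumes prerequisites appear earlier in the list than their dependents.
     */
    public static Map<String, String> statuses(List<String> order,
                                               Map<String, List<String>> dependsOn,
                                               Set<String> failing) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String test : order) {
            boolean blocked = false;
            for (String dep : dependsOn.getOrDefault(test, List.of())) {
                if (!"PASS".equals(result.get(dep))) {
                    blocked = true; // prerequisite failed or was itself skipped
                }
            }
            if (blocked) {
                result.put(test, "SKIP");
            } else if (failing.contains(test)) {
                result.put(test, "FAIL");
            } else {
                result.put(test, "PASS");
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical journey: order -> invoice -> payment, plus one independent test.
        List<String> order = List.of("createOrder", "createInvoice", "makePayment", "viewProfile");
        Map<String, List<String>> dependsOn = Map.of(
                "createInvoice", List.of("createOrder"),
                "makePayment", List.of("createInvoice"));
        // prints {createOrder=FAIL, createInvoice=SKIP, makePayment=SKIP, viewProfile=PASS}
        System.out.println(statuses(order, dependsOn, Set.of("createOrder")));
    }
}
```

In this model a single failure leaves two of the four tests without a real verdict, which is why a report that omits skipped tests gives an incomplete picture of product health.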

Problem

There's a separate class called Utility which has functions for report generation and report flush. Below is the code for report generation:

    public static ExtentHtmlReporter htmlReporter;
    public static ExtentReports reports;
    public static ExtentTest logger;

    /**
     * This function is to generate report of a test method which is passed as a
     * parameter
     */
    public static void reportCreation(Method method, String reportName) {
        htmlReporter = new ExtentHtmlReporter("Reports/" + reportName + ".html");
        reports = new ExtentReports();
        htmlReporter.setAppendExisting(true);
        reports.attachReporter(htmlReporter);
        logger = reports.createTest(method.getAnnotation(Test.class).description());
    }

And below is the code for report flush:

    /**
     * Function to log the status of a test function and flush the report
     * after that test function has run
     */
    public static void finishReportAfterTest(ITestResult result, Method method) throws IOException {
        if (result.getStatus() == ITestResult.SUCCESS) {
            logger.log(Status.PASS, method.getAnnotation(Test.class).description());
        } else if (result.getStatus() == ITestResult.FAILURE) {
            logger.log(Status.FAIL, result.getThrowable().getClass().getName());
            logger.addScreenCaptureFromPath(
                    Utility.captureScreenshot(Configurations.driver, "ScreenShot" + method.getName()));
        } else if (result.getStatus() == ITestResult.SKIP) {
            logger.log(Status.SKIP, method.getAnnotation(Test.class).description());
        }
        reports.flush();
    }

We invoke these two functions in our test class: reportCreation in @BeforeMethod and finishReportAfterTest in @AfterMethod. Below is the sample test class code:

public class UnitTest {

@BeforeMethod

public void beforeMethod(Method method) throws Exception {

Utility.reportCreation(method, "UnitTest");

}

@Test(description = "First Test Function")

public void testFunctionOne() {

assertEquals(true, false);

}

@Test(description = "Second Test Function", dependsOnMethods = { "testFunctionOne" })

public void testFunctionTwo() {

Utility.logger.log(Status.INFO, "Second Test Function");

}

@Test(description = "Third Test Function")

public void testFunctionThree() {

Utility.logger.log(Status.INFO, "Third Test Function");

}

@AfterMethod

public void afterMethod(ITestResult result, Method method) throws IOException {

Utility.finishReportAfterTest(result, method);

}

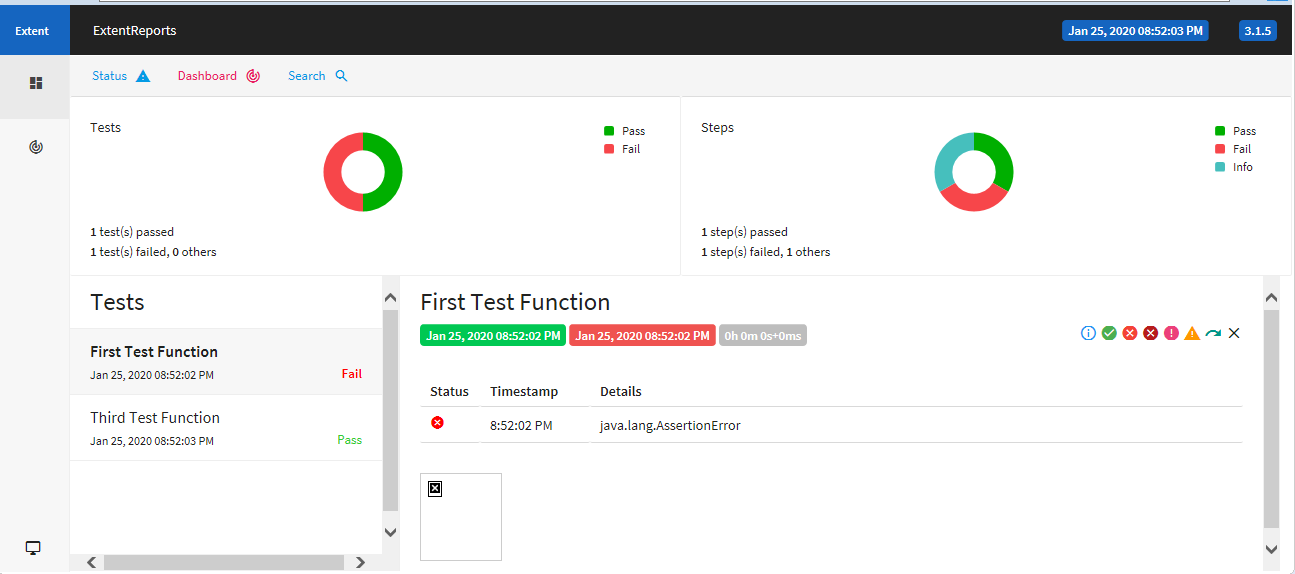

}testFunctionOne has an assertion failure. This will skip testFunctionTwo as testFunctionTwo depends on testFunctionOne. We expected whether test function's status is Pass, Fail, or Skip, the report will capture it. But after executing the test class, we observed that the report has not captured the skipped test. The report looks as shown in the below image:

Reason

The @BeforeMethod and @AfterMethod methods of the test class are executed only when the test function actually runs. Because our report entries are created and logged in those two methods, a skipped test function never reaches the report. This made our test report analysis time-consuming. To overcome this, we explored the option below, which gives us a more accurate status of the test run.
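The effect can be reproduced with a small plain-Java model. This is only a sketch under the assumption stated above, namely that the reporting hooks wrap a test function that runs and are bypassed entirely for a skipped one. It uses neither TestNG nor Extent Reports; the class HookOnlyReportModel and its methods are hypothetical stand-ins for our Utility functions.

```java
import java.util.ArrayList;
import java.util.List;

public class HookOnlyReportModel {

    /** Stand-in for the report: one line per test the reporting hook saw. */
    static final List<String> report = new ArrayList<>();

    /** Mimics the @AfterMethod hook, which is where the status gets logged. */
    static void afterMethod(String test, String status) {
        report.add(test + ": " + status);
    }

    /**
     * Runs one test the way this model assumes the framework does:
     * the hook runs only around an executed test, and a skipped test bypasses it.
     */
    static void run(String test, boolean prerequisitePassed, boolean bodyPasses) {
        if (!prerequisitePassed) {
            return; // skipped: no hook runs, so nothing reaches the report
        }
        afterMethod(test, bodyPasses ? "PASS" : "FAIL");
    }

    public static List<String> runSuite() {
        report.clear();
        run("testFunctionOne", true, false);   // assertion failure
        run("testFunctionTwo", false, true);   // depends on testFunctionOne
        run("testFunctionThree", true, true);  // independent
        return new ArrayList<>(report);
    }

    public static void main(String[] args) {
        // prints [testFunctionOne: FAIL, testFunctionThree: PASS]
        System.out.println(runSuite());
    }
}
```

Note that the branch for ITestResult.SKIP in finishReportAfterTest does not help here: in this model, as in our run, the function holding that branch is never called for the skipped test.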

Solution

This is where ITestListener comes into the picture. We made a small modification to our framework: we created a separate class called TestListeners that implements ITestListener. Below is the code:

    import org.testng.ITestContext;
    import org.testng.ITestListener;
    import org.testng.ITestResult;

    import com.aventstack.extentreports.Status;

    public class TestListeners implements ITestListener {

        @Override
        public void onTestStart(ITestResult result) {
        }

        @Override
        public void onTestSuccess(ITestResult result) {
        }

        @Override
        public void onTestFailure(ITestResult result) {
        }

        // Skipped tests bypass @BeforeMethod and @AfterMethod, so the report
        // entry is created, logged, and flushed here instead.
        // Relies on Utility.reports having been initialised by an earlier
        // reportCreation call.
        @Override
        public void onTestSkipped(ITestResult result) {
            Utility.logger = Utility.reports.createTest(result.getMethod().getDescription());
            Utility.logger.log(Status.SKIP, result.getMethod().getDescription());
            Utility.reports.flush();
        }

        @Override
        public void onTestFailedButWithinSuccessPercentage(ITestResult result) {
        }

        @Override
        public void onStart(ITestContext context) {
        }

        @Override
        public void onFinish(ITestContext context) {
        }

    }

We added the @Listeners annotation for the TestListeners class to our test class. Below is the code:

    @Listeners(TestListeners.class)
    public class UnitTest {

        @BeforeMethod
        public void beforeMethod(Method method) throws Exception {
            Utility.reportCreation(method, "UnitTest");
        }

        @Test(description = "First Test Function")
        public void testFunctionOne() {
            assertEquals(true, false);
        }

        @Test(description = "Second Test Function", dependsOnMethods = { "testFunctionOne" })
        public void testFunctionTwo() {
            Utility.logger.log(Status.INFO, "Second Test Function");
        }

        @Test(description = "Third Test Function")
        public void testFunctionThree() {
            Utility.logger.log(Status.INFO, "Third Test Function");
        }

        @AfterMethod
        public void afterMethod(ITestResult result, Method method) throws IOException {
            Utility.finishReportAfterTest(result, method);
        }

    }

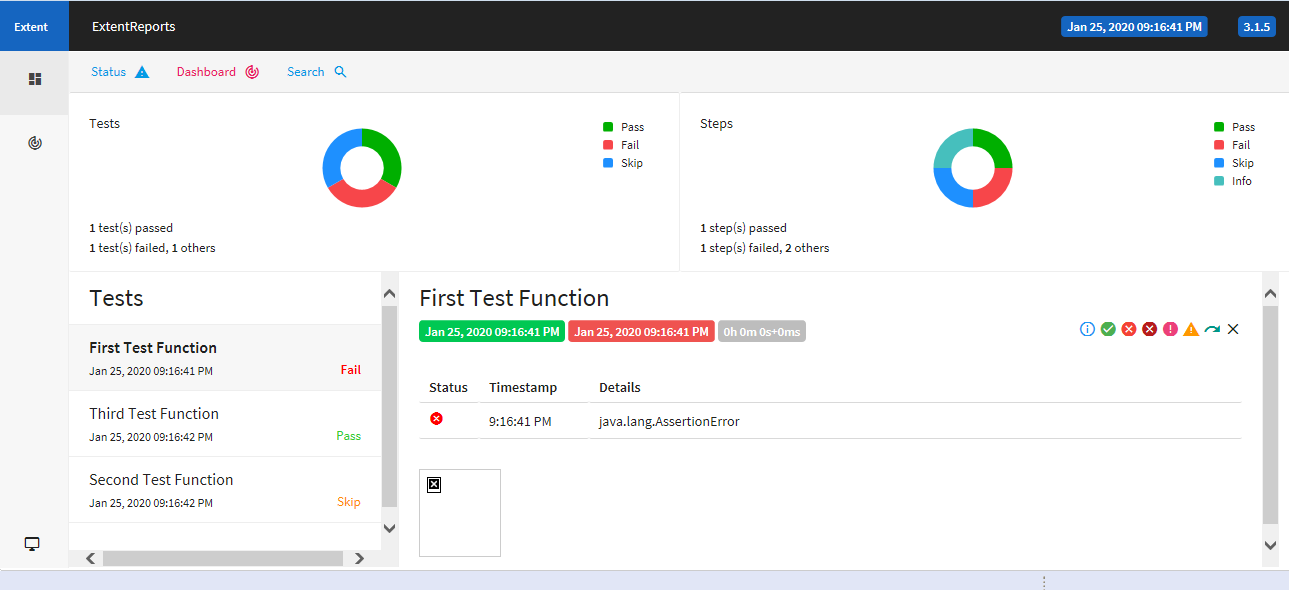

Here is a sample test report with the skipped test function captured:

With this ITestListener implementation, we are able to see all the statuses in the report. Since this captures the skipped tests, it saves our test report analysis time.
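The idea behind the fix can also be shown without TestNG or Extent Reports. The sketch below is a simplified plain-Java model, not our framework code: the SkipListener interface is a hypothetical, cut-down counterpart of the onTestSkipped callback, and the model assumes the runner announces a skip to its listener even though the per-test hooks do not run.

```java
import java.util.ArrayList;
import java.util.List;

public class ListenerReportModel {

    /** Hypothetical, cut-down counterpart of a skip callback. */
    interface SkipListener {
        void onTestSkipped(String test);
    }

    /** Stand-in for the report: one line per recorded test. */
    static final List<String> report = new ArrayList<>();

    /** Runs one test; a skipped test is announced to the listener instead of the hook. */
    static void run(String test, boolean prerequisitePassed, boolean bodyPasses,
                    SkipListener listener) {
        if (!prerequisitePassed) {
            listener.onTestSkipped(test); // the skip is still announced
            return;
        }
        report.add(test + ": " + (bodyPasses ? "PASS" : "FAIL")); // hook-based logging
    }

    public static List<String> runSuite() {
        report.clear();
        // The listener writes the skipped test into the same report.
        SkipListener listener = test -> report.add(test + ": SKIP");
        run("testFunctionOne", true, false, listener);   // assertion failure
        run("testFunctionTwo", false, true, listener);   // depends on testFunctionOne
        run("testFunctionThree", true, true, listener);  // independent
        return new ArrayList<>(report);
    }

    public static void main(String[] args) {
        // prints [testFunctionOne: FAIL, testFunctionTwo: SKIP, testFunctionThree: PASS]
        System.out.println(runSuite());
    }
}
```

All three test functions now appear in the modelled report, which mirrors what we see in the Extent report after registering the listener.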

Thank you for reading.